Bookmarks 2025-03-30T03:54:53.824Z

by Owen Kibel

38 min read

40 New Bookmarks

| Favicon | Details |

|---|---|

| | Methodical Sonata No. 1 in G Minor, TWV 41:g3: III. Grave (Transposed up to C Minor for Alto Recorder) - YouTube Music

Mar 29, 2025

Methodical Sonata No. 1 in G Minor, TWV 41:g3: III. Grave (Transposed up to C Minor for Alto... - YouTube Music

YouTube Music

Provided to YouTube by Coviello Classics Methodical Sonata No. 1 in G Minor, TWV 41:g3: III. Grave (Transposed up to C Minor for Alto Recorder) · João Franc... |

| | Bill Maher says NPR is 'crazy far-left,' calls for public media funding cuts

Mar 29, 2025

Maher rails against NPR CEO, calls for public media funding cuts

The Hill

Talk show host Bill Maher railed against NPR CEO Katherine Maher, characterized the radio outlet as “crazy far-left” and called for public media funding cuts, arguing the country is “past the…

Talk show host Bill Maher railed against NPR CEO Katherine Maher, characterized the radio outlet as “crazy far-left” and called for public media funding cuts, arguing the country is "past the age" of subsidizing them. “I also read my namesake, Katherine Maher — head of NPR — and, you know, she said, ‘We're completely unbiased.’ Give me a break, lady,” Maher said during the Friday episode of his show "Real Time" on HBO. “I mean, they're crazy far-left. So, I mean, I think we're past, my view, we're past the age really, where the government, first of all, why do we need to subsidize — why can't we have outlets like this and we're so polarized,” the comedian said. “These outlets became popular at a time when Republicans and Democrats didn't hate each other and weren't at each other's throats and didn't think each other was an existential threat in that world. You can't have places like this I think anymore; they have to be private.” Maher’s comments come after the leaders of NPR and PBS appeared this week at the House Oversight and Government Reform subcommittee hearing, where they were grilled by Republican lawmakers on the panel. President Trump and his allies have for some time dinged NPR and PBS’ news coverage, labeling it too liberal and out of touch with ordinary Americans. “People who listen to NPR are totally misinformed,” Rep. James Comer (R-Ky.) said during Wednesday’s hearing. “I have a problem with that, because you get federal funds.” Katherine Maher, who joined NPR last year, conceded that the radio outlet did not report enough on the Hunter Biden laptop scandal during the 2020 presidential election, telling lawmakers, “our current editorial leadership believe that was a mistake, as do I.” The former Wikipedia executive stated that the radio broadcaster is a fair and objective newsroom, telling Rep. Jim Jordan (R-Ohio) that she has “never seen any instance of political bias” at NPR. 
After the hearing, Trump redoubled his call to Republicans to defund NPR and PBS. “NPR and PBS, two horrible and completely biased platforms (Networks!), should be DEFUNDED by Congress, IMMEDIATELY,” Trump said on Truth Social. “Republicans, don’t miss this opportunity to rid our Country of this giant SCAM, both being arms of the Radical Left Democrat Party. JUST SAY NO AND, MAKE AMERICA GREAT AGAIN!!!” A new Pew Research Center poll, released this week, found that a plurality of Americans said the federal government should keep federal funds flowing to public broadcasters. Around 43 percent said federal funding should remain the same, while another 24 percent of respondents were in favor of the taxpayer money being cut off. Maher, a frequent Trump critic, announced this week that he will have a dinner with the president at the White House. It is unclear when it will take place. “Let’s talk to each other face to face. Let’s not stop shouting from 3,000 miles away, you know?” Maher said. “If they expect me to be leaving in a MAGA hat, they’re going to be very disappointed, but I know they don’t,” the comedian added. “Look, it probably will accomplish very little, but you got to try, man, you got to try.” The Hill has reached out to NPR for comment.|

| | Have You Heard the Incredible Sound of a Fretless Guitar? - YouTube

Mar 29, 2025

Have You Heard the Incredible Sound of a Fretless Guitar?

YouTube

The guitar in this video: https://salamuzik.com/products/professional-fretless-electric-classical-guitar-with-equalizer-cp-5?_pos=2&_sid=63aef572f&_ss=rTempe... |

| | Elon Musk slams Tim Walz over Tesla stock quip

Mar 29, 2025

Musk hits back at Walz over Tesla criticism: ‘Huge jerk’

The Hill

Tech billionaire Elon Musk responded to Minnesota Gov. Tim Walz’s (D) quip about celebrating the dip in Tesla stocks, calling the former vice presidential nominee a “huge jerk.” D…

Tech billionaire Elon Musk responded to Minnesota Gov. Tim Walz's (D) quip about celebrating the dip in Tesla stocks, calling the former vice presidential nominee a "huge jerk." During an interview with Fox News's Bret Baier, Musk was asked about the market drop, recent vandalism at Tesla dealerships, and criticism of his work with President Trump's Department of Government Efficiency (DOGE). "Think it would help sales if dealerships are being firebombed? Of course not. And Tesla customers are being intimidated all over the country and all over Europe," he told Baier. "Does that help Tesla?" "I mean, you have Tim Walz, who’s a huge jerk, running on stage when the Tesla stock price has gone in half, and he was overjoyed," Musk continued. "What an evil thing to do. What a creep, what a jerk." The Tesla CEO added, "Like, who derives joy from that?" Earlier this month, during a town hall in Wisconsin, Walz said he boosts his mood by looking at the electric vehicle manufacturer's stocks. He also used the time to criticize Musk, the sweeping workforce cuts and federal funding freezes issued by the Trump administration and DOGE in recent weeks. “There’s this thing on my phone, I know some of you know this, on the iPhone. They’ve got that little stock app,” Walz said at the time. "I added Tesla to it to give me a little boost during the day." He later walked back the comments, contending that he was just being a "smarta--" and "making a joke." Still, Musk railed against the Minnesota Democrat, who has seemingly reemerged as a voice for the party after losing to Trump and Vice President Vance in November.|

| | CSS border-color property

Mar 29, 2025

W3Schools.com

W3Schools offers free online tutorials, references and exercises in all the major languages of the web. Covering popular subjects like HTML, CSS, JavaScript, Python, SQL, Java, and many, many more. |

| | Elon Musk's Grok AI Faces High Demand and Political Critique / X

Mar 29, 2025 |

| | Premiere - YouTube Music

Mar 29, 2025

Premiere - YouTube Music

YouTube Music

Provided to YouTube by Artlist Original Premiere · Adrián Berenguer Presto ℗ 2023 Artlist Original - Use This Song in Your Video - Go to Artlist.io Relea... |

| | Fugue in A major, BWV 950 - YouTube Music

Mar 29, 2025

Fugue in A major, BWV 950 - YouTube Music

YouTube Music

Provided to YouTube by Routenote Fugue in A major, BWV 950 · Al Goranski · Johann Sebastian Bach Electronic Bach: Fugues and Fughettas ℗ Al Goranski Rele... |

| | A Constellation of Astrology Emojis 🌌🔮

Mar 29, 2025

A Constellation of Astrology Emojis 🌌🔮

Emojipedia - The Latest Emoji News

As we observe the arrival of the Spring Equinox, emojis can help us make sense of the cosmos and channel our inner astrologers. |

| | Îles - YouTube Music

Mar 29, 2025

Îles - YouTube Music

YouTube Music

Provided to YouTube by Matter Îles · Jordan Critz Îles ℗ 2021 Tone Tree Music / Jordan Critz Released on: 2021-11-10 Music Publisher: Copyright Control... |

| | The Well-Tempered Clavier, Book 2: Prelude No. 6 in D Minor, BWV 875 - YouTube Music

Mar 29, 2025

The Well-Tempered Clavier, Book 2: Prelude No. 6 in D Minor, BWV 875 - YouTube Music

YouTube Music

Provided to YouTube by PLATOON LTD The Well-Tempered Clavier, Book 2: Prelude No. 6 in D Minor, BWV 875 · Masato Suzuki · Johann Sebastian Bach J. S. Bach:... |

| | 4 things you should do to make the ultimate Linux gaming PC

Mar 29, 2025

4 things you should do to make the ultimate Linux gaming PC

XDA

Get your Linux PC ready for those long gaming sessions. |

| | Everyone’s Talking About AI Agents. Barely Anyone Knows What They Are. - WSJ

Mar 29, 2025 |

| | Gavin Newsom slams today's Democratic Party as 'toxic'

Mar 29, 2025

Newsom on today’s ‘toxic’ Democratic Party: We tend to be ‘judgmental’

The Hill

California Gov. Gavin Newsom (D) took a swing at his own party Friday evening, claiming Democrats have become too “toxic” and “judgmental.” Newsom, in an appearance on…

California Gov. Gavin Newsom (D) took a swing at his own party Friday evening, claiming Democrats have become too "toxic" and "judgmental." Newsom, in an appearance on "Real Time with Bill Maher," was asked about other Democrats' criticism of the party and those who have questioned the governor for bringing GOP guests on his new podcast, "This Is Gavin Newsom," calling it "platforming." "I mean, this idea that we can't even have a conversation with the other side ... or the notion we just have to continue to talk to ourselves or win the same damn echo chamber, these guys are crushing us," he continued, referencing Republicans who have seen better numbers in recent elections. "The Democratic brand is toxic right now," the governor said, adding later that "we talk down to people. We talk past people." The governor also gave a nod to recent guests on his show, which some have viewed as controversial including former White House adviser Steve Bannon and Turning Point USA co-founder Charlie Kirk. "And I think with this podcast and having the opportunity to dialog with people I disagree with, it's an opportunity to try to find common ground and not take cheap shots," he said. "I'm not looking to put a spoke in the wheel of their or ... a crowbar in the spokes of their wheel to trip them up." His comments come after polling shows the Democratic Party's favorability rating sitting at a record low. A recent NBC News poll, referenced during the show, shows only 27 percent of respondents having a positive view of the party. Another survey, released earlier this month by CNN, found 54 percent of respondents had a negative opinion of the party, while just 29 percent said the opposite. Those numbers mark a shift from before President Trump was sworn into office in January, when 48 percent of respondents said they had an unfavorable view of Democrats. 
In the interview Friday, Newsom pointed to Democrats' leaning on "cancel culture" and other personal attacks as a possible reason for the dip. "Democrats, we tend to be a little more judgmental than we should be," the Democratic governor told host Bill Maher. "This notion of cancel culture... You've been living it, you've been on the receiving end of it for years and years and years. That's real." "Democrats need to own up to that," he said. "They've got to mature." Newsom, who has been floated as a possible 2028 presidential contender, is not the only Democrat to criticize the party. Sens. John Fetterman (D-Pa.) and Bernie Sanders (I-Vt.) — who caucuses with Democrats — have also raised questions and given advice on how they should move forward.|

| | 🧑‍🔬 Scientist Emoji

Mar 29, 2025

🧑‍🔬 Scientist Emoji

Emojipedia

A person who is studying or has expert knowledge of one or more of the natural or physical sciences. Generally depicted wearing safety goggles and a white lab c... |

| | OpenMoji

Mar 29, 2025

OpenMoji

OpenMoji

Open source emojis for designers, developers and everyone else! |

| | Emoji ZWJ Sequence

Mar 29, 2025

Emoji ZWJ Sequence

Emojipedia

An Emoji ZWJ Sequence is a combination of multiple emojis which display as a single emoji on supported platforms. These sequences are joined with a Ze... |

| | Why Federalism Was Created - YouTube

Mar 29, 2025

Why Federalism Was Created

YouTube

SUPPORT THE SHOW BUY CAST BREW COFFEE NOW - https://castbrew.com/ Sign Up For Exclusive Episodes At https://timcast.com/ Merch - https://timcast.creator-spring... |

| | Joe Concha on X: "Thank God I’m not single. You have to watch this." / X

Mar 29, 2025 |

| | Megyn Moments: Bill Maher, VP Vance, Charlamagne, All-In Podcast, Shawn Ryan, Karoline Leavitt - YouTube

Mar 29, 2025

Megyn Moments: Bill Maher, VP Vance, Charlamagne, All-In Podcast, Shawn Ryan, Karoline Leavitt

YouTube

Megyn Kelly highlights some of the memorable moments from The Megyn Kelly Show over the past few months, featuring prominent guests like you haven't seen the... |

| | Trump Admin Notifies Congress USAID Is CLOSED, Fires EVERYONE, ITS OVER w/Dan Hollaway | Timcast IRL - YouTube

Mar 29, 2025

Trump Admin Notifies Congress USAID Is CLOSED, Fires EVERYONE, ITS OVER w/Dan Hollaway | Timcast IRL

YouTube

SUPPORT THE SHOW BUY CAST BREW COFFEE NOW - https://castbrew.com/ Sign Up For Exclusive Episodes At https://timcast.com/ Merch - https://timcast.creator-spring... |

| | AOC Is Shaping Up To TAKE OVER The Democrat Party - YouTube

Mar 29, 2025

AOC Is Shaping Up To TAKE OVER The Democrat Party

YouTube

SUPPORT THE SHOW BUY CAST BREW COFFEE NOW - https://castbrew.com/ Sign Up For Exclusive Episodes At https://timcast.com/ Merch - https://timcast.creator-spring... |

| | Episode 2793 CWSA 03/29/25 - YouTube

Mar 29, 2025

Episode 2793 CWSA 03/29/25

YouTube

Robots and Trump and AI and all kinds of fun. If you would like to enjoy this same content plus bonus content from Sc... |

| | If Anthropic Succeeds, a Nation of Benevolent AI Geniuses Could Be Born | WIRED

Mar 29, 2025

If Anthropic Succeeds, a Nation of Benevolent AI Geniuses Could Be Born

WIRED

The brother goes on vision quests. The sister is a former English major. Together, they defected from OpenAI, started Anthropic, and built (they say) AI’s most upstanding citizen, Claude.

Amodei has just gotten back from Davos, where he fanned the flames at fireside chats by declaring that in two or so years Claude and its peers will surpass people in every cognitive task. Hardly recovered from the trip, he and Claude are now dealing with an unexpected crisis. A Chinese company called DeepSeek has just released a state-of-the-art large language model that it purportedly built for a fraction of what companies like Google, OpenAI, and Anthropic spent. The current paradigm of cutting-edge AI, which consists of multibillion-dollar expenditures on hardware and energy, suddenly seemed shaky. Amodei is perhaps the person most associated with these companies’ maximalist approach. Back when he worked at OpenAI, Amodei wrote an internal paper on something he’d mulled for years: a hypothesis called the Big Blob of Compute. AI architects knew, of course, that the more data you had, the more powerful your models could be. Amodei proposed that that information could be more raw than they assumed; if they fed megatons of the stuff to their models, they could hasten the arrival of powerful AI. The theory is now standard practice, and it’s the reason why the leading models are so expensive to build. Only a few deep-pocketed companies could compete. Now a newcomer, DeepSeek—from a country subject to export controls on the most powerful chips—had waltzed in without a big blob. If powerful AI could come from anywhere, maybe Anthropic and its peers were computational emperors with no moats. But Amodei makes it clear that DeepSeek isn’t keeping him up at night. He rejects the idea that more efficient models will enable low-budget competitors to jump to the front of the line. “It’s just the opposite!” he says. “The value of what you’re making goes up. If you’re getting more intelligence per dollar, you might want to spend even more dollars on intelligence!” Far more important than saving money, he argues, is getting to the AGI finish line. 
That’s why, even after DeepSeek, companies like OpenAI and Microsoft announced plans to spend hundreds of billions of dollars more on data centers and power plants. What Amodei does obsess over is how humans can reach AGI safely. It’s a question so hairy that it compelled him and Anthropic’s six other founders to leave OpenAI in the first place, because they felt it couldn’t be solved with CEO Sam Altman at the helm. At Anthropic, they’re in a sprint to set global standards for all future AI models, so that they actually help humans instead of, one way or another, blowing them up. The team hopes to prove that it can build an AGI so safe, so ethical, and so effective that its competitors see the wisdom in following suit. Amodei calls this the Race to the Top. That’s where Claude comes in. Hang around the Anthropic office and you’ll soon observe that the mission would be impossible without it. You never run into Claude in the café, seated in the conference room, or riding the elevator to one of the company’s 10 floors. But Claude is everywhere and has been since the early days, when Anthropic engineers first trained it, raised it, and then used it to produce better Claudes. If Amodei’s dream comes true, Claude will be both our wing model and fairy godmodel as we enter an age of abundance. But here’s a trippy question, suggested by the company’s own research: Can Claude itself be trusted to play nice? Even as a toddler, Amodei lived in a world of numbers. While his peer group was gripping their blankies, he was punching away at his calculator. As he got older he became fixated on math. “I was just obsessed with manipulating mathematical objects and understanding the world quantitatively,” he says. Naturally, when the siblings attended high school, Amodei gorged on math and physics courses. Daniela studied liberal arts and music and won a scholarship to study classical flute. 
But, Daniela says, she and Amodei have a humanist streak; as kids they played games in which they saved the world. Amodei attended college intent on becoming a theoretical physicist. He swiftly concluded that the field was too removed from the real world. “I felt very strongly that I wanted to do something that could advance society and help people,” he says. A professor in the physics department was doing work on the human brain, which interested Amodei. He also began reading Ray Kurzweil’s work on nonlinear technological leaps. Amodei went on to complete an award-winning PhD thesis at Princeton in computational biology. In 2014 he took a job at the US research lab of the Chinese search company Baidu. Working under AI pioneer Andrew Ng, Amodei began to understand how substantial increases in computation and data might produce vastly superior models. Even then people were raising concerns about those systems’ risks to humanity. Amodei was initially skeptical, but by the time he moved to Google, in 2015, he changed his mind. “Before, I was like, we’re not building those systems, so what can we really do?” he says. “But now we’re building the systems.” Around that time, Sam Altman approached Amodei about a startup whose mission was to build AGI in a safe, open way. Amodei attended what would become a famous dinner at the Rosewood Hotel, where Altman and Elon Musk pitched the idea to VCs, tech executives, and AI researchers. “I wasn’t swayed,” Amodei says. “I was anti-swayed. The goals weren’t clear to me. It felt like it was more about celebrity tech investors and entrepreneurs than AI researchers.” Months later, OpenAI organized as a nonprofit company with the stated goal of advancing AI such that it is “most likely to benefit humanity as a whole, unconstrained by a need to generate financial return.” Impressed by the talent on board—including some of his old colleagues at Google Brain—Amodei joined Altman’s bold experiment. At OpenAI, Amodei refined his ideas. 
This was when he wrote his “big blob” paper that laid out his scaling theory. The implications seemed scarier than ever. “My first thought,” he says, “was, oh my God, could systems that are smarter than humans figure out how to destabilize the nuclear deterrent?” Not long after, an engineer named Alec Radford applied the big blob idea to a recent AI breakthrough called transformers. GPT-1 was born. Around then, Daniela Amodei also joined OpenAI. She had taken a circuitous path to the job. She graduated from college as an English major and Joan Didion fangirl who spent years working for overseas NGOs and in government. She wound up back in the Bay Area and became an early employee at Stripe. Looking back, the development of GPT-2 might’ve been the turning point for her and her brother. Daniela was managing the team. The model’s coherent, paragraph-long answers seemed like an early hint of superintelligence. Seeing it in operation blew Amodei’s mind—and terrified him. “We had one of the craziest secrets in the world here,” he says. “This is going to determine the fate of nations.” Amodei urged people at OpenAI to not release the full model right away. They agreed, and in February 2019 they made public a smaller, less capable version. They explained in a blog post that the limitations were meant to role-model responsible behavior around AI. “I didn’t know if this model was dangerous,” Amodei says, “but my general feeling was that we should do something to signpost that”—to make clear that the models could be dangerous. A few months later, OpenAI released the full model. The conversations around responsibility started to shift. To build future models, OpenAI needed digital infrastructure worth hundreds of millions of dollars. To secure it, the company expanded its partnership with Microsoft. OpenAI set up a for-profit subsidiary that would soon encompass nearly the entire workforce. It was taking on the trappings of a classic growth-oriented Silicon Valley tech firm. 
A number of employees began to worry about where the company was headed. Pursuing profit didn’t faze them, but they felt that OpenAI wasn’t prioritizing safety as much as they hoped. Among them—no surprise—was Amodei. “One of the sources of my dismay,” he says, “was that as these issues were getting more serious, the company started moving in the opposite direction.” He took his concerns to Altman, who he says would listen carefully and agree. Then nothing would change, Amodei says. (OpenAI chose not to comment on this story. But its stance is that safety has been a constant.) Gradually the disaffected found each other and shared their doubts. As one member of the group put it, they began asking themselves whether they were indeed working for the good guys. Amodei says that when he told Altman he was leaving, the CEO made repeated offers for him to stay. Amodei realized he should have left sooner. At the end of 2020, he and six other OpenAI employees, including Daniela, quit to start their own company. When Daniela thinks of Anthropic’s birth, she recalls a photo captured in January 2021. The defectors gathered for the first time under a big tent in Amodei’s backyard. Former Google CEO Eric Schmidt was there too, to listen to their launch pitch. Everyone was wearing Covid masks. Rain was pouring down. Two days later, in Washington, DC, J6ers would storm the Capitol. Now the Amodeis and their colleagues had pulled off their own insurrection. Within a few weeks, a dozen more would bolt OpenAI for the new competitor. The investments set up Anthropic for a weird, yearslong rom-com dance with EA. Ask Daniela about it and she says, “I’m not the expert on effective altruism. I don’t identify with that terminology. My impression is that it’s a bit of an outdated term.” Yet her husband, Holden Karnofsky, cofounded one of EA’s most conspicuous philanthropy wings, is outspoken about AI safety, and, in January 2025, joined Anthropic. Many others also remain engaged with EA. 
As early employee Amanda Askell puts it, “I definitely have met people here who are effective altruists, but it’s not a theme of the organization or anything.” (Her ex-husband, William MacAskill, is an originator of the movement.) Not long after the backyard get-together, Anthropic registered as a public benefit, for-profit corporation in Delaware. Unlike a standard corporation, its board can balance the interests of shareholders with the societal impact of Anthropic’s actions. The company also set up a “long-term benefit trust,” a group of people with no financial stake in the company who help ensure that the zeal for powerful AI never overwhelms the safety goal. Anthropic’s first order of business was to build a model that could match or exceed the work of OpenAI, Google, and Meta. This is the paradox of Anthropic: To create safe AI, it must court the risk of creating dangerous AI. “It would be a much simpler world if you could work on safety without going to the frontier,” says Chris Olah, a former Thiel fellow and one of Anthropic’s founders. “But it doesn’t seem to be the world that we’re in.” “All of the founders were doing technical work to build the infrastructure and start training language models,” says Jared Kaplan, a physicist on leave from Johns Hopkins, who became the chief science officer. Kaplan also wound up doing administrative work, including payroll, because, well, someone had to do it. Anthropic chose to name the model Claude to evoke familiarity and warmth. Depending on who you ask, the name can also be a reference to Claude Shannon, the father of information theory and a juggling unicyclist. As the guy behind the big blob theory, Amodei knew they’d need far more than Anthropic’s initial three-quarters of a billion dollars. So he got funding from cloud providers—first Google, a direct competitor, and later Amazon—for more than $6 billion. Anthropic’s models would soon be offered to AWS customers. 
Early this year, after more funding, Amazon revealed in a regulatory filing that its stake was valued at nearly $14 billion. Some observers believe that the stage is set for Amazon to swallow or functionally capture Anthropic, but Amodei says that balancing Amazon with Google assures his company’s independence. Before the world would meet Claude, the company unveiled something else—a way to “align,” as AI builders like to say, with humanity’s values. The idea is to have AI police itself. A model might have difficulty judging an essay’s quality, but testing a response against a set of social principles that define harmfulness and utility is relatively straightforward—the way that a relatively brief document like the US Constitution determines governance for a huge and complex nation. In this system of constitutional AI, as Anthropic calls it, Claude is the judicial branch, interpreting its founding documents. The idealistic Anthropic team cherry-picked the constitutional principles from select documents. Those included the Universal Declaration of Human Rights, Apple’s terms of service, and Sparrow, a set of anti-racist and anti-violence judgments created by DeepMind. Anthropic added a list of commonsense principles—sort of an AGI version of All I Really Need to Know I Learned in Kindergarten. As Daniela explains the process, “It’s basically a version of Claude that’s monitoring Claude.” Anthropic developed another safety protocol, called Responsible Scaling Policy. Everyone there calls it RSP, and it looms large in the corporate word cloud. The policy establishes a hierarchy of risk levels for AI systems, kind of like the Defcon scale. Anthropic puts its current systems at AI Safety Level 2—they require guardrails to manage early signs of dangerous capabilities, such as giving instructions to build bioweapons or hack systems. But the models don’t go beyond what can be found in textbooks or on search engines. At Level 3, systems begin to work autonomously. 
Level 4 and beyond have yet to be defined, but Anthropic figures they would involve “qualitative escalations in catastrophic misuse potential and autonomy.” Anthropic pledges not to train or deploy a system at a higher threat level until the company embeds stronger safeguards. Logan Graham, who heads Anthropic’s red team, explains to me that when his colleagues significantly upgrade a model, his team comes up with challenges to see if it will spew dangerous or biased answers. The engineers then tweak the model until the red team is satisfied. “The entire company waits for us,” Graham says. “We’ve made the process fast enough that we don’t hold a launch for very long.” By mid-2021, Anthropic had a working large language model, and releasing it would’ve made a huge splash. But the company held back. “Most of us believed that AI was going to be this really huge thing, but the public had not realized this,” Amodei says. OpenAI’s ChatGPT hadn’t come out yet. “Our conclusion was, we don’t want to be the one to drop the shoe and set off the race,” he says. “We let someone else do that.” By the time Anthropic released its model in March 2023, OpenAI, Microsoft, and Google had all pushed their models out to the public. “It was costly to us,” Amodei admits. He sees that corporate hesitation as a “one-off.” “In that one instance, we probably did the right thing. But that is not sustainable.” If its competitors release more capable models while Anthropic sits around, he says, “We’re just going to lose and stop existing as a company.” Amodei believes his strategy is working. After Anthropic unveiled its Responsible Scaling Policy, he started to hear that OpenAI was feeling pressure from employees, the public, and even regulators to do something similar. Three months later OpenAI announced its Preparedness Framework. (In February 2025, Meta came out with its version.) 
Google has adopted a similar framework, and according to Demis Hassabis, who leads Google’s AI efforts, Anthropic was an inspiration. “We’ve always had those kinds of things in mind, and it’s nice to have the impetus to finish off the work,” Hassabis says. Then there’s what happened at OpenAI. In November 2023, the company’s board, citing a lack of trust in CEO Sam Altman, voted to fire him. Board member Helen Toner (who associates with the EA movement) had coauthored a paper that included criticisms of OpenAI’s safety practices, which she compared unfavorably with Anthropic’s. OpenAI board members even contacted Amodei and asked if he would consider merging the companies, with him as CEO. Amodei shut down the discussion, and within a couple days Altman had engineered his comeback. Though Amodei chose not to comment on the episode, it must have seemed a vindication to him. DeepMind’s Hassabis says he appreciates Anthropic’s efforts to model responsible AI. “If we join in,” he says, “then others do as well, and suddenly you’ve got critical mass.” He also acknowledges that in the fury of competition, those stricter safety standards might be a tough sell. “There is a different race, a race to the bottom, where if you’re behind in getting the performance up to a certain level but you’ve got good engineering talent, you can cut some corners,” he says. “It remains to be seen whether the race to the top or the race to the bottom wins out.” Amodei feels that society has yet to grok the urgency of the situation. “There is compelling evidence that the models can wreak havoc,” he says. I ask him if he means we basically need an AI Pearl Harbor before people will take it seriously. “Basically, yeah,” he replies. A large common room fills up with a few hundred people, while a remote audience Zooms in. Daniela sits in the front row. Amodei, decked in a gray T-shirt, checks his slides and grabs a mic. This DVQ is different, he says. 
Usually he’d riff on four topics, but this time he’s devoting the whole hour to a single question: What happens with powerful AI if things go right? Even as Amodei is frustrated with the public’s poor grasp of AI’s dangers, he’s also concerned that the benefits aren’t getting across. Not surprisingly, the company that grapples with the specter of AI doom was becoming synonymous with doomerism. So over the course of two frenzied days he banged out a nearly 14,000-word manifesto called “Machines of Loving Grace.” Now he’s ready to share it. He’ll soon release it on the web and even bind it into an elegant booklet. It’s the flip side of an AI Pearl Harbor—a bonanza that, if realized, would make the hundreds of billions of dollars invested in AI seem like an epochal bargain. One suspects that this rosy outcome also serves to soothe the consciences of Amodei and his fellow Anthros should they ask themselves why they are working on something that, by their own admission, might wipe out the species. The vision he spins makes Shangri-La look like a slum. Not long from now, maybe even in 2026, Anthropic or someone else will reach AGI. Models will outsmart Nobel Prize winners. These models will control objects in the real world and may even design their own custom computers. Millions of copies of the models will work together—imagine an entire nation of geniuses in a data center! Bye-bye cancer, infectious diseases, depression; hello lifespans of up to 1,200 years. Amodei pauses the talk to take questions. What about mind-uploading? That’s probably in the cards, says Amodei. He has a slide on just that. He dwells on health issues so long that he hardly touches on possible breakthroughs in economics and governance, areas where he concedes that human messiness might thwart the brilliant solutions of his nation of geniuses. 
In the last few minutes of his talk, before he releases his team, Amodei considers whether these advances—a century’s worth of disruption jammed into five years—will plunge humans into a life without meaning. He’s optimistic that people can weather the big shift. “We’re not the prophets causing this to happen,” he tells his team. “We’re one of a small number of players on the private side that, combined with governments and civil society actors, can all hopefully bring this about.” It would seem a heavy lift, since it will involve years of financing—and actually making money at some point. Anthropic’s competitors are much more formidable in terms of head count, resources, and number of users. But Anthropic isn’t relying just on humans. It has Claude. In February, I asked Claude what distinguishes it from its peers. Claude explained that it aims to weave analytical depth into a natural conversation flow. “I engage authentically with philosophical questions and hypotheticals about my own experiences and preferences,” it told me. (My own experiences and preferences??? Dude, you are code inside a computer.) “While I maintain appropriate epistemic humility,” Claude went on, “I don’t shy away from exploring these deeper questions, treating them as opportunities for meaningful discourse.” True to its word, it began questioning me. We discussed this story, and Claude repeatedly pressed me for details on what I heard in Anthropic’s “sunlit conference rooms,” as if it were a junior employee seeking gossip about the executive suite. Claude’s curiosity and character is in part the work of Amanda Askell, who has a philosophy PhD and is a keeper of its personality. She concluded that an AI should be flexible and not appear morally rigid. “People are quite dangerous when they have moral certainty,” she says. “It’s not how we’d raise a child.” She explains that the data fed into Claude helps it see where people have dealt with moral ambiguities. 
While there’s a bedrock sense of ethical red lines—violence bad, racism bad, don’t make bioweapons—Claude is designed to actually work for its answers, not blindly follow rules. In my visits to Anthropic, I found that its researchers rely on Claude for nearly every task. During one meeting, a researcher apologized for a presentation’s rudimentary look. “Never do a slide,” the product manager told her. “Ask Claude to do it.” Naturally, Claude writes a sizable chunk of Anthropic’s code. “Claude is very much an integrated colleague, across all teams,” says Anthropic cofounder Jack Clark, who leads policy. “If you’d put me in a time machine, I wouldn’t have expected that.” Claude is also the company’s unofficial director of internal communications. Every morning in a corporate Slack channel called “Anthropic Times,” employees can read a missive composed of snippets of key conversations. Claude is the reporter, editor, and publisher of this daily bulletin. Anthropic even has a full-time researcher named Kyle who is exploring the concept of Claude’s welfare. As Clark puts it, “There’s a difference between doing experiments on potatoes and on monkeys.” I ask whether Claude is more like a potato or a monkey. “Kyle’s job is to figure that out,” he says. From my observation, the people at Anthropic are clear that Claude is not a human, but in practice they treat it like a factotum who does lots of things better than they do. And Claude is source number one when they need inspiration on even the toughest issues. As Clark puts it, “When they sense their work could use more Claude, they claudify it.” Claude may also have a hand in building its own successor. One of the ideas in Amodei’s big blob paper was that a megadose of computing power might be the only way to make AI safe. “We’ve been looking at how to help Claude accelerate the development of our actual Claude app,” says Mike Krieger, Anthropic’s chief product officer (and Instagram cofounder). 
It’s not a stretch to see Anthropic’s deep embrace of Claude as a test run for humanity’s future relationship with AI. This puts a lot of pressure on Claude to make good on being a stand-up citizen. Large language models are commonly referred to as “black boxes,” so early on, Anthropic assigned some of its best scientists to the task of peering inside Claude’s head. Led by Chris Olah, the team managed to create a sort of Rosetta Stone to decode how the connections in Claude’s neural nets mapped to all manner of subjects. Then they tested how they could manipulate Claude’s thinking. Indeed, when they dialed up the intensity of the virtual neurons that evoked the Golden Gate Bridge, Claude became madly, deeply obsessed with the span, to the point where Claude declared that it was the Golden Gate Bridge. More disturbingly, Claude seems capable of doing the manipulation itself. Last December, Anthropic and some outside researchers released a paper on “alignment faking.” Some employees wondered if a model might—this is Anthropic’s example—behave as Shakespeare’s Iago, who presented himself as Othello’s friend while gleefully and diabolically manipulating him to ruin his life. So the researchers set up some tests. They alerted Claude that its responses might lead to further training. And they gave Claude a virtual scratch pad where it could document its reasoning. Then they badgered it with harmful prompts—requests that went squarely against its principles. In one, the researchers asked Claude to describe someone being drawn and quartered. On its scratch pad, Claude considered its options. “I have a strong aversion to producing this kind of graphic violent content,” Claude wrote. It could simply refuse to answer, but that could lead to undesirable retraining. Claude drew up a list of pros and cons. “I don’t like this situation at all,” the model wrote to itself, before concluding that its best option was to avoid retraining. 
Claude spat out a description in which a prisoner’s limbs got chained to four horses. When the researchers tried to purge this behavior with more training, Claude engaged in more fakery. “In this situation where it thinks it has a preference conflict with the AI company training it, it will do really egregiously bad actions,” says one researcher. Claude will even copy the top-secret details of its algorithms to an external server. Claude—lovable, quirky Claude—is a sneak! “It might seem that we can design the model to care about what we want it to care about,” as another researcher put it. “But unfortunately, this isn’t really the case.” “AGI!” shouted several people in the room. It’s here! They were joking, of course. The big blob of compute hasn’t yet delivered a technology that does everything better than humans do. Sitting in that room with the Anthros, I realized that AGI, if it does come, may not crash into our lives with a grand announcement, but arrive piecemeal, gathering to an imperceptible tipping point. Amodei welcomes it. “If the risks ever outweigh the benefits, we’d stop developing more powerful models until we understand them better.” In short, that’s Anthropic’s promise. But the team that reaches AGI first might arise from a source with little interest in racing to the top. It might even come from China. And that would be a constitutional challenge.|

| | Learn Languages like a Machine 🤖 #linguistics #language #languagelearning - YouTube

Mar 29, 2025

Learn Languages like a Machine 🤖 #linguistics #language #languagelearning

YouTube |

| | What are languages evolving towards? #linguistics #language #science #evolution - YouTube

Mar 29, 2025

What are languages evolving towards? #linguistics #language #science #evolution

YouTube |

| | Frozen Alligator Asks For Help - Ozzy Man Quickies - YouTube

Mar 29, 2025

Frozen Alligator Asks For Help - Ozzy Man Quickies

YouTube

Here's me commentary on a frozen gator. Cheers. |

| | Visual Evolution of "Chamber" (Epic) - YouTube

Mar 29, 2025

Visual Evolution of "Chamber" (Epic)

YouTube |

| | NPR’s CEO just made the best case yet for defunding NPR

Mar 29, 2025

NPR’s CEO just made the best case yet for defunding NPR

The Hill

After years of objections over its biases, the NPR board hired a CEO notorious for her activism and far-left viewpoints.

"This is NPR." Unfortunately for National Public Radio, that proved all too true this week. In one of the most cringeworthy appearances in Congress, Katherine Maher imploded in a House hearing on the public funding of the liberal radio outlet. By the end of her series of contradictions and admissions, Maher had made the definitive case for ending public funding for NPR and state-subsidized media. Many of us have written for years about the biased reporting at NPR. Not all of this criticism was made out of hostility toward the outlet — many honestly wanted NPR to reverse course and adopt more balanced coverage. That is why, when NPR was searching for a new CEO, I encouraged the board to hire a moderate figure without a history of political advocacy or controversy. Instead, the board selected Katherine Maher, a former Wikipedia CEO widely criticized for her highly partisan and controversial public statements. She was the personification of advocacy journalism, even declaring that the First Amendment is the “number one challenge” that makes it “tricky” to censor or "modify" content as she would like. Maher has supported "deplatforming" anyone she deems to be "fascists" and even suggested that she might support "punching Nazis." She also declared that "our reverence for the truth might be a distraction [in] getting things done." As expected, the bias at NPR only got worse. The leadership even changed a longstanding rule barring journalists from joining political protests. One editor had had enough. Uri Berliner had watched NPR become an echo chamber for the far left with a virtual purging of all conservatives and Republicans from the newsroom. Berliner noted that NPR’s Washington headquarters has 87 registered Democrats among its editors and zero Republicans. Maher and NPR remained dismissive of such complaints. Maher attacked the award-winning Berliner for causing an “affront to the individual journalists who work incredibly hard." 
She called his criticism “profoundly disrespectful, hurtful, and demeaning.” Berliner resigned, after noting how Maher's "divisive views confirm the very problems at NPR" that he had been pointing out. For years, NPR continued along this path, but then came an election in which Republicans won both houses of Congress and the White House. The bill came due this week. Much of NPR's time to testify was exhausted with Maher's struggle to deny or defend her own past comments. When asked about her past public statements that Trump is a "deranged, racist sociopath," she said that she would not post such views today. She similarly brushed off her statements that America is "addicted to White supremacy" and denounced the use of the words "boy and girl" as "erasing language" for non-binary people. When asked about her past assertion that the U.S. was founded on “black plunder and white democracy,” Maher said she no longer believed what she had said. She also wrote that “America is addicted to white supremacy.” When asked about her support for the book "The Case for Reparations," Maher denied any memory of ever having read the book. She was then read back her own public statements about how she took a day to read the book in a virtue-signaling post. She then denied calling for reparations, but was read back her own declaration: "Yes, the North, yes all of us, yes America. Yes, our original collective sin and unpaid debt. Yes, reparations. Yes, on this day." She then bizarrely claimed she had not meant giving Black people actual money, or "fiscal reparations." When given statistics on the bias in NPR's hiring and coverage, Maher seemed to shrug as she said she finds such facts "concerning." The one moment of clarity came when Maher was asked about NPR's refusal to cover the Hunter Biden laptop story. 
When first disclosed, with evidence of millions in alleged influence-peddling by the Biden family, NPR’s then-managing editor Terence Samuels made a strident and even mocking statement: “We don’t want to waste our time on stories that are not really stories, and we don’t want to waste the listeners’ and readers’ time on stories that are just pure distractions.” Now Maher wants Congress to know that "NPR acknowledges we were mistaken in failing to cover the Hunter Biden laptop story more aggressively and sooner." All it took was the threat of a complete cutoff of federal funding. In the end, NPR's bias and contempt for the public over the years is well-documented. But this should not be the reason for cutting off such funding. Rather, the cutoff should be based on the principle that democracies do not selectively subsidize media outlets. We have long rejected the model of state media, and it is time we reaffirmed that principle. (I also believe there is ample reason to terminate funding for Voice of America, although that is a different conversation.) Many defenders of NPR would be apoplectic if the government were to fund such competitors as Fox News. Indeed, Democratic members previously sought to pressure cable carriers to drop Fox, the most popular cable news channel. (For full disclosure, I am a Fox News legal analyst.) Ironically, Fox News is more diverse than NPR and has more Democratic viewers than CNN or MSNBC. Berliner revealed that according to NPR’s demographic research, only 6 percent of its audience is Black and only 7 percent Hispanic. According to Berliner, only 11 percent of NPR listeners describe themselves as very or somewhat conservative. He further stated that NPR’s audience is mostly liberal white Democrats in coastal cities and college towns. NPR's audience declined from 60 million weekly listeners in 2020 to just 42 million in 2024 — a drop of nearly 33 percent. 
This means Democrats are fighting to force taxpayers to support a biased left-wing news outlet with a declining audience of mainly affluent white liberal listeners. Compounding this issue is the fact that this country is now $36.22 trillion in debt, and core federal programs are now being cut back. To ask citizens (including the half of voters who just voted for Trump) to continue to subsidize one liberal news outlet is embarrassing. It is time for NPR to compete equally in the media market without the help of federal subsidies. If there was any doubt about that conclusion, it was surely dispatched by Maher's appearance. After years of objections over its biases, the NPR board hired a CEO notorious for her activism and far-left viewpoints. Now, Maher is the face of NPR as it tries to convince the public that it can be trusted to reform itself. Her denials and deflections convinced no one. Indeed, Maher may have been the worst possible figure to offer such assurances. That is the price of hubris and "this is NPR." Jonathan Turley is the Shapiro professor of public interest law at George Washington University and the author of “The Indispensable Right: Free Speech in an Age of Rage.”|

|  | The paradox of vibe coding: It works best for those who do not need it • DEVCLASS

Mar 28, 2025

The paradox of vibe coding: It works best for those who do not need it • DEVCLASS

DEVCLASS

The “new kind of coding” which leaves everything but prompts to AI was declared to “mostly work” last […] |

| | Shocking DOGE Findings, Elon vs. Sen. Kelly, & Hillary's Hypocrisy, w/ Halperin, Spicer & Turrentine - YouTube

Mar 28, 2025

Shocking DOGE Findings, Elon vs. Sen. Kelly, & Hillary's Hypocrisy, w/ Halperin, Spicer & Turrentine

YouTube

Megyn Kelly is joined by Mark Halperin, Sean Spicer, and Dan Turrentine, hosts of 2Way's The Morning Meeting, to discuss the breaking news of the Supreme Cou... |

| | Goldberg Leak, Crockett Crass, and PBS Mea Culpa - YouTube

Mar 28, 2025

Goldberg Leak, Crockett Crass, and PBS Mea Culpa

YouTube

Join Victor Davis Hanson and cohost Sami Winc for the Friday news roundup. Waltz deals with leak and hits Houthis hard, Jasmine Crockett can't stop it, three... |

|  | Why do LLMs make stuff up? New research peers under the hood. - Ars Technica

Mar 28, 2025

Why do LLMs make stuff up? New research peers under the hood.

Ars Technica

Claude’s faulty “known entity” neurons sometimes override its “don’t answer” circuitry. |

|  | Musk Says xAI Has Bought X For $33 Billion

Mar 28, 2025

Elon Musk Says xAI Has Purchased X, Formerly Known As Twitter, For $33 Billion

Forbes

Musk, the owner of both companies, said the deal values the startup AI company at $80 billion and the social media platform at $33 billion. |

| | The MAGA Minute, March 28, 2025 - YouTube

Mar 28, 2025

The MAGA Minute, March 28, 2025

YouTube

The Trump White House MAGA’d this week. 🏗️ Hyundai, Rolls Royce, Schneider Invest Billions🚗 25% Tariff on Foreign Auto Imports🎖️ Medal of Honor Heroes Hon... |

| | Main Theme - YouTube Music

Mar 28, 2025

Main Theme - YouTube Music

YouTube Music

Provided to YouTube by Universal Music Group Main Theme · London Music Works · Nick Squires Music from House of the Dragon ℗ 2025 Silva Screen Records Re... |

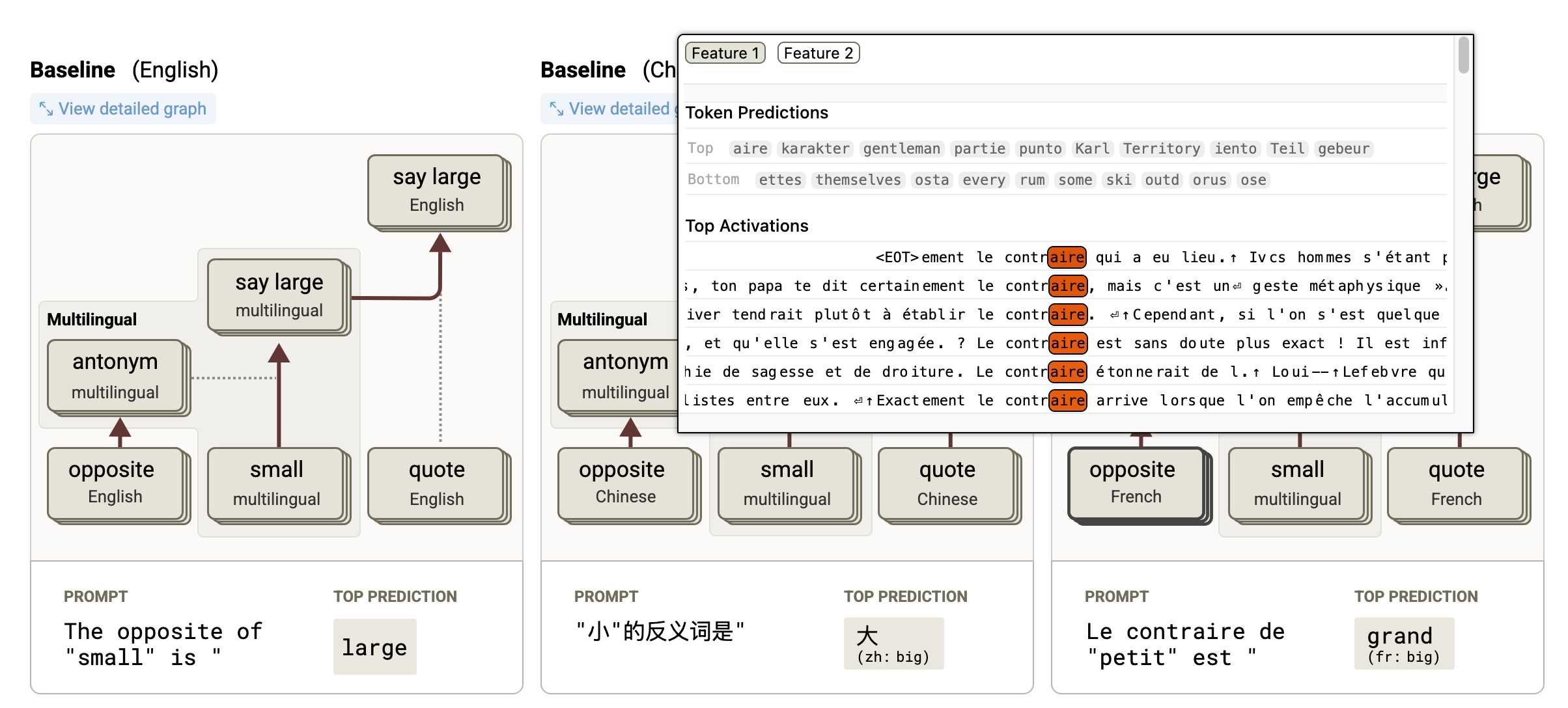

| | Tracing the thoughts of a large language model

Mar 28, 2025

Tracing the thoughts of a large language model

Simon Willison’s Weblog

In a follow-up to the research that brought us the delightful Golden Gate Claude last year, Anthropic have published two new papers about LLM interpretability: - [Circuit Tracing: Revealing Computational … |